
Over the past few years, Large Language Models (LLMs) have transformed how people think about Artificial Intelligence (AI). From enhancing natural language processing (NLP) capabilities to enabling new applications in machine learning (ML), they have become a key driver of technological innovation. By training on massive datasets, these models learn the complexity, context, and subtle nuances of language. This lets machines perform advanced tasks such as translation, content generation, and sentiment analysis, and the models have also shown considerable promise in fields such as image recognition and biopharmaceutical research. As the technology has advanced, model sizes have grown rapidly, bringing stronger predictive performance and a broader range of applications and signaling that AI has entered a new, more capable era.

However, as model sizes increase, running these high-performance models in resource-constrained environments has become a challenge. Edge computing devices, such as the Nvidia Jetson series, provide an efficient way to perform computations close to the data source, supporting real-time data processing and decision-making without relying on a central cloud. This presents new opportunities for deploying large models, especially in applications requiring fast response and processing capabilities, such as autonomous driving, remote monitoring, and smart cities.

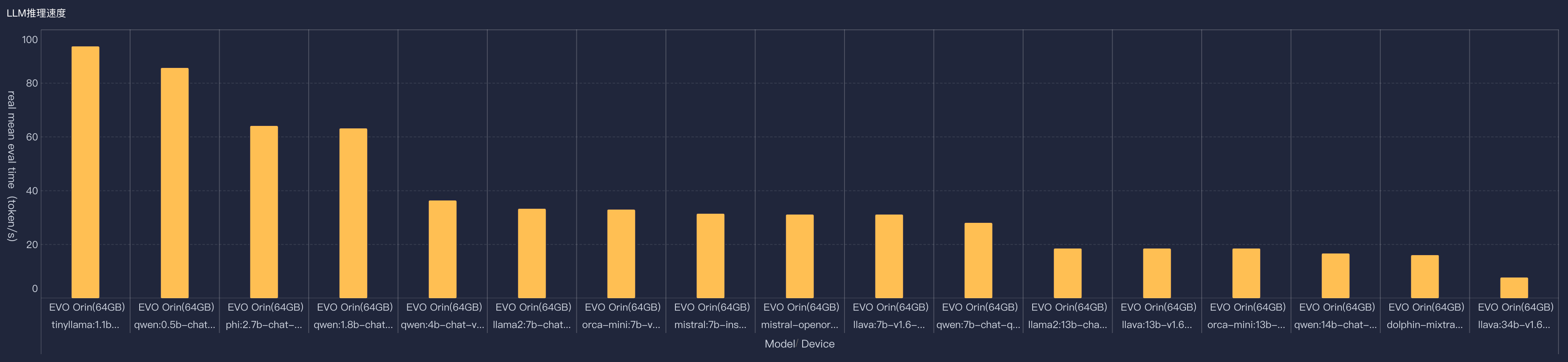

Edge devices, however, have limited compute and storage resources, so developers must optimize models to fit these constraints while preserving performance and accuracy. Against this backdrop, MIIVII has undertaken a systematic evaluation to understand and demonstrate how various mainstream large models perform on edge computing devices such as the Nvidia Jetson. Through these evaluations, we aim to show which models run effectively on the Jetson platform and how they perform in operation. This gives developers and businesses practical guidance for selecting and deploying large models and helps advance edge computing and AI technology.
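To make the memory constraint concrete, here is a back-of-envelope estimate of how much memory is needed just to store a model's weights at different precisions. It is a minimal sketch under stated assumptions: weights are assumed to dominate, the KV cache, activations, and runtime overhead are ignored, and the 7-billion-parameter size is a hypothetical example rather than one of the evaluated models. Jetson modules share a single memory pool between CPU and GPU, so on an 8 GB device such as the Orin NX 8GB, the 16-bit weights of a 7B model (about 14 GB) cannot fit, while a 4-bit copy (about 3.5 GB) leaves room for everything else.

```python
# Back-of-envelope estimate of the memory needed just to hold model weights.
# Assumption: weights dominate; KV cache, activations, and runtime overhead
# are ignored, so real usage on a device will be higher.

def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Return approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    bytes_total = num_params * bits_per_weight / 8  # 8 bits per byte
    return bytes_total / 1e9


if __name__ == "__main__":
    # Hypothetical 7-billion-parameter model, for illustration only.
    params = 7e9
    for bits in (16, 8, 4):
        print(f"{bits:>2}-bit weights: ~{weight_memory_gb(params, bits):.1f} GB")
```

Running the script prints roughly 14.0 GB, 7.0 GB, and 3.5 GB for 16-, 8-, and 4-bit weights respectively, which is why low-bit quantization matters so much on memory-limited edge hardware.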

With this work, MIIVII not only demonstrates its commitment to technological innovation but also provides valuable resources and insights to the wider AI community, helping unlock the potential of edge computing in future AI applications.

This evaluation covers the following families of large models, focusing primarily on versions quantized to 4 bits and 8 bits. (The order does not indicate ranking.)

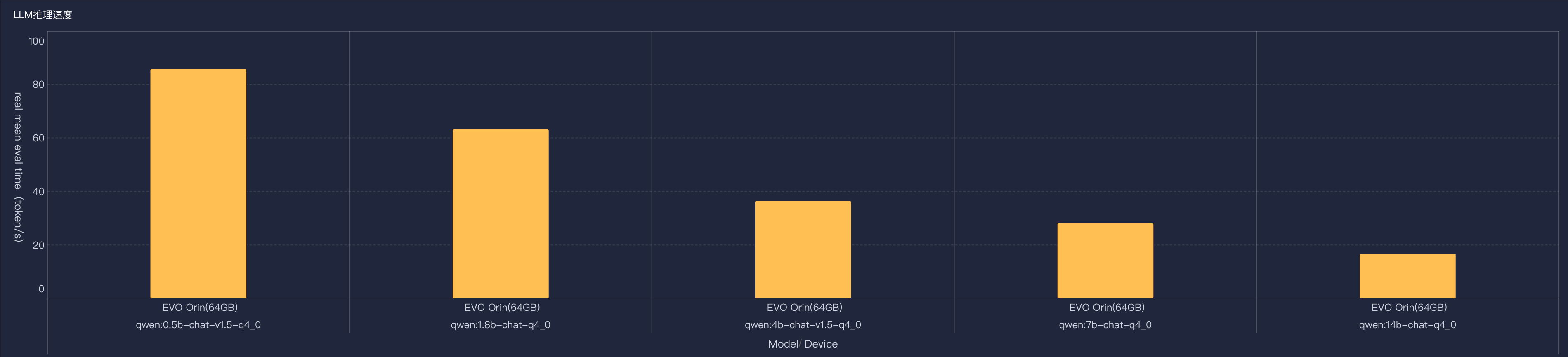

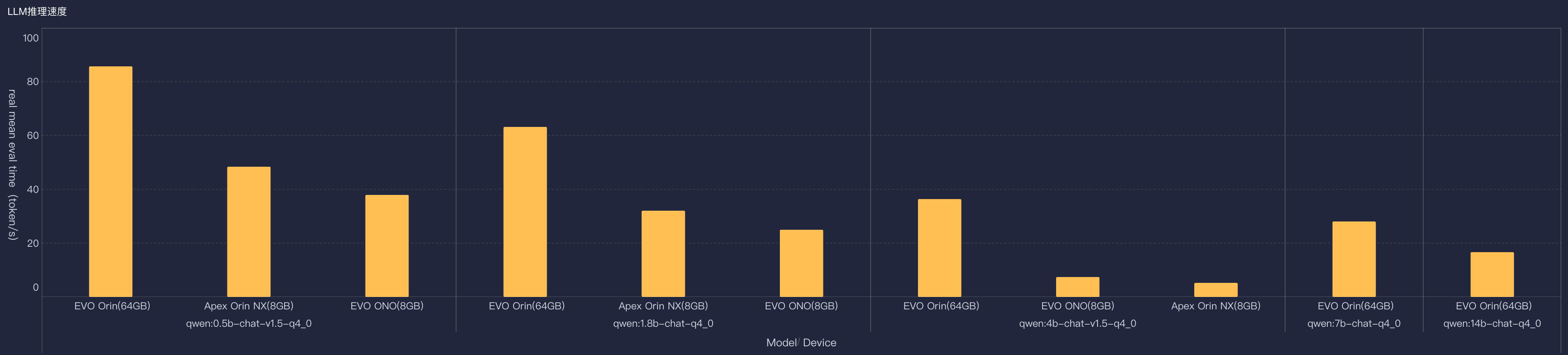

A. Tongyi Qianwen

B. Llama 2

C. Gemma

D. Mistral

E. LLaVA

F. Phi

G. TinyLlama
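The models listed above were evaluated mainly in 4-bit and 8-bit quantized form. As a rough illustration of what that means, the following is a minimal sketch of symmetric, per-tensor, round-to-nearest quantization in plain Python. It is a toy under explicit assumptions: the article does not say which quantization scheme or toolkit produced the evaluated models, practical schemes usually use per-channel or per-group scales to reduce error, and `quantize` and `dequantize` are hypothetical names chosen for illustration.

```python
# Minimal sketch of symmetric, per-tensor, round-to-nearest quantization.
# Assumption: a generic illustration only; the evaluated models may have been
# produced with a different (e.g. per-group) scheme.

def quantize(weights: list[float], bits: int) -> tuple[list[int], float]:
    """Map floats to signed integers in [-(2**(bits-1) - 1), 2**(bits-1) - 1]."""
    qmax = 2 ** (bits - 1) - 1                    # 127 for 8-bit, 7 for 4-bit
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0  # avoid division by zero
    # Python's round() rounds to nearest, breaking ties to even; clamp for safety.
    q = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Recover approximate floats from the integer codes."""
    return [v * scale for v in q]


if __name__ == "__main__":
    w = [0.12, -0.57, 0.33, 0.91, -0.05]
    for bits in (8, 4):
        q, scale = quantize(w, bits)
        w_hat = dequantize(q, scale)
        err = max(abs(a - b) for a, b in zip(w, w_hat))
        print(f"{bits}-bit codes={q} max_abs_error={err:.4f}")
```

Going from 8 to 4 bits halves the storage per weight but shrinks the integer range from ±127 to ±7, so the per-weight rounding error (bounded by half the scale) grows accordingly. This is the accuracy-versus-memory trade-off that quantized deployment on edge devices has to balance.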

B. Apex Orin NX (Orin NX 8GB)

C. Performance of Tongyi Qianwen 2 on the Orin Series

The results above were obtained with CUDA acceleration only; TensorRT-LLM was not used. We plan to repeat the evaluation with TensorRT-LLM in the near future.

In our tests on Chinese-language scenarios, the MIIVII model outperformed the other, English-oriented baseline models. We recommend that users try it first.

MIIVII's comprehensive evaluation of mainstream large models on the Nvidia Jetson platform represents an in-depth exploration at the intersection of edge computing and artificial intelligence. Beyond demonstrating MIIVII's technical expertise and forward-looking vision, this work offers practical value to the industry as a whole.

First, these evaluations give developers and enterprises a clear, objective performance reference, helping them choose large models that are suited to edge devices such as Jetson. This accelerates the development and deployment of edge AI applications and improves the efficiency and effectiveness of the ecosystem as a whole.

Second, MIIVII's evaluation work also drives the development of large-model optimization techniques. By analyzing in depth how models perform in resource-constrained environments, we can identify the shortcomings of existing techniques and explore and implement new optimization methods, advancing large model technology and making it practical in a wider range of application scenarios.

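In summary, MIIVII's comprehensive evaluation of large model performance on the Jetson platform not only reflects our relentless pursuit of technological innovation but also gives developers and enterprises a practical reference for deploying large models at the edge.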

Going forward, we will keep exploring and innovating to advance edge computing and large model technology, contributing to a smarter, more efficient, and more sustainable future.